Gaming at 4K and 60fps (or higher) has been the holy grail for quite a while now. Sure, 4K displays have been widely available for a number of years, but the hardware needed to drive those displays has been cost prohibitive. Well, until very recently at least. Yet the crisp, true-to-life visuals 4K provides, along with that juicy support for things like HDR, are just too tempting to give up. However, the single-minded drive towards UHD gaming is not only a bit pointless for most gamers, but a huge waste of money, energy, and computing resources.

First off, when we’re talking 4K, what we really mean is UHDTV, which has a resolution of 3840 × 2160. True 4K is a slightly wider format used for cinema, but we don’t need to worry about that here.

Now, this resolution wasn’t chosen arbitrarily. 2160 is evenly divisible by the vertical resolutions of the two previous high definition formats, providing four times the pixels of 1080p, and nine times those of 720p. That allows those images to scale perfectly across the screen with no black magic trickery involved, unlike what’s needed with standard definition content. What this means is that older HD content still looks good unless you’re really close to the screen, or have a really large display. And that’s the secret sauce here that renders native 4K pointless for most people.
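For anyone who wants to check the scaling arithmetic, here is a minimal Python sketch. The `scale_factors` helper is a made-up name for illustration only. It reports how many times an older format fits into UHD along each axis and in total pixels, or `None` when it doesn't fit evenly.

```python
# Resolutions as (width, height) in pixels.
UHD = (3840, 2160)
HD_FORMATS = {"1080p": (1920, 1080), "720p": (1280, 720)}


def scale_factors(source, target=UHD):
    """Return (per-axis scale, total pixel ratio) if source scales
    evenly into target, otherwise None. Hypothetical helper."""
    src_w, src_h = source
    tgt_w, tgt_h = target
    # Both axes must divide evenly, and by the same whole number.
    if tgt_w % src_w or tgt_h % src_h or tgt_w // src_w != tgt_h // src_h:
        return None
    axis = tgt_w // src_w
    return axis, axis * axis


for name, resolution in HD_FORMATS.items():
    print(name, scale_factors(resolution))

# A standard definition frame (720 x 480) does not divide evenly.
print("480p", scale_factors((720, 480)))
```

1080p comes out at 2× per axis (4× the pixels) and 720p at 3× per axis (9× the pixels), while the standard definition frame returns `None`, which is why SD content needs fancier scaling.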

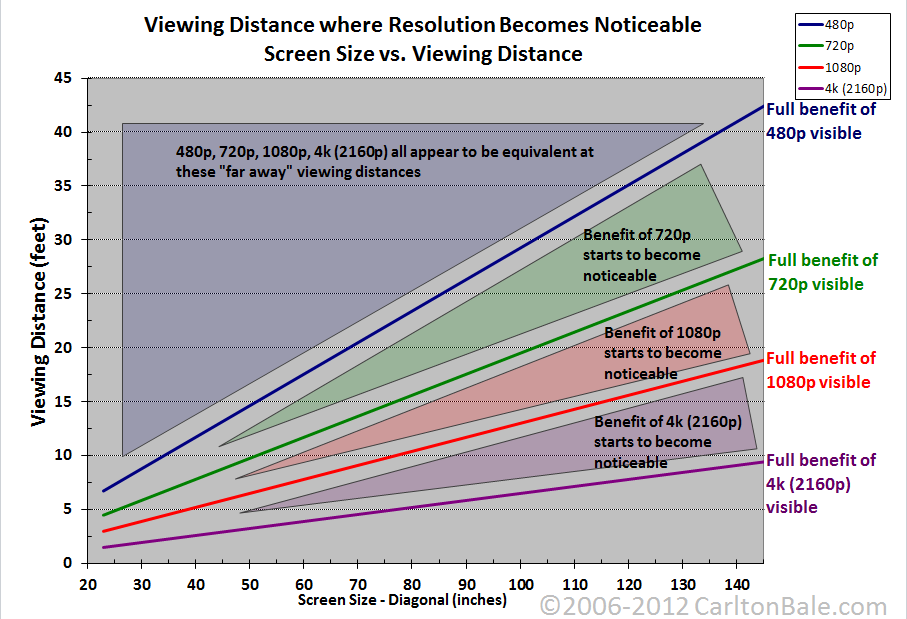

So there’s this chart by Carlton Bale that’s been floating around for a few years now. It compares viewing distance with display size, showing whether 4K is worth it. See, the issue here is not so much the total resolution, but pixel density. The human eye is an analogue device, so it doesn’t have a fixed resolution per se. But it does have its limitations. Once you get far enough away from a display, you’ll eventually get to the point where it’s impossible to resolve the individual pixels. This is the reason why Apple has never put anything higher than a QXGA display in their 10” iPads. You’re eventually going to hit the point of diminishing returns, where higher resolutions have little to no perceivable effect for people with 20/20 vision.

According to Bale’s handy chart, at a typical viewing distance of 10 feet, one would need an 85” television in order to simply start seeing the benefits of 4K over 1080p. At 15 feet, the TV would have to be 120” in size. To get the full benefit of 4K, you’d need a far larger display still. Well over 140”. Which is probably going to be too large and too cost prohibitive for your typical ham and egg consumer. In fact, you can’t even walk into a Best Buy to get a display that large. I know, I checked. 85” is where they top out unless you go for projectors or some ultra expensive specialty sets.

Now, I’m not sure what science Bale used to determine these numbers. However, the chart seems to be widely regarded as accurate. Basically, for a 65” display, which most household TVs seem to top out at these days, there’s really no difference between 4K and 1080p at typical viewing distances. In fact, the six best selling 4K TVs listed on Amazon are all considerably smaller, at about 43 inches on average. At those sizes, you would need to be sitting three feet away to get the full effect of 4K. Something which I can personally vouch for, as I have one of those in my bedroom. I cannot tell the difference between 1080p and 4K at my usual sitting distance of 8 feet.
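To get a feel for where numbers like these can come from, here is a rough Python sketch. It is not necessarily Bale’s method. It assumes the common rule of thumb that 20/20 vision resolves about one arcminute, and a 16:9 panel. The function name `max_useful_distance_ft` is invented for this example.

```python
import math

# Assumption: 20/20 vision resolves roughly one arcminute (1/60 of a degree).
ARCMINUTE = math.radians(1 / 60)


def max_useful_distance_ft(diagonal_in, horizontal_pixels, aspect=(16, 9)):
    """Distance in feet beyond which one pixel subtends less than an
    arcminute, i.e. individual pixels can no longer be resolved."""
    aspect_w, aspect_h = aspect
    # Screen width from the diagonal and the aspect ratio.
    width_in = diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)
    pixel_pitch_in = width_in / horizontal_pixels
    return pixel_pitch_in / math.tan(ARCMINUTE) / 12


for size in (43, 65, 85):
    starts = max_useful_distance_ft(size, 1920)  # 1080p pixels become visible
    full = max_useful_distance_ft(size, 3840)    # 4K pixels become visible
    print(f'{size}": 4K starts to help inside {starts:.1f} ft, '
          f'full benefit inside {full:.1f} ft')
```

Under those assumptions a 43” set works out to roughly 5.6 feet before 4K starts to help and about 2.8 feet for the full benefit, which lines up with the three feet mentioned above. Different acuity assumptions will shift the numbers, so treat it as a ballpark.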

Your devices don’t really care about any of this, however. If you set your console or PC to output at 4K, it’s going to render scenes at the full 2160p. The problem here is that your device now has to process four times the information of a 1080p image. That requires substantially more GPU power, for what is ultimately going to result in very little benefit for most living room setups.
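A back-of-the-envelope Python sketch puts a number on that workload. It only counts pixels drawn per second, and real rendering cost does not scale perfectly with pixel count, so take it as a rough guide. The names `pixels_per_second` and `MODES` are made up for this example.

```python
def pixels_per_second(width, height, fps):
    """Raw number of pixels that must be rendered each second."""
    return width * height * fps


# Illustrative output modes: (width, height, frames per second).
MODES = {
    "1080p/60": (1920, 1080, 60),
    "1080p/120": (1920, 1080, 120),
    "4K/60": (3840, 2160, 60),
}

baseline = pixels_per_second(*MODES["1080p/60"])

for name, mode in MODES.items():
    total = pixels_per_second(*mode)
    print(f"{name}: {total:,} pixels/s ({total / baseline:.0f}x 1080p/60)")
```

By this crude measure, 4K/60 pushes four times the pixels of 1080p/60, while 1080p/120 only pushes twice as many.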

4K/60 capable consoles and GPUs are expensive, and consume a lot more electricity than those limited to 1080p/60. That results in more heat, higher energy bills, and really isn’t all too friendly to the polar bears, as Jeremy Clarkson likes to say. It also means that the same GPU is wasting computing resources rendering a pointlessly high resolution, when it could be working on making the scene more detailed, or pushing higher frame rates. In fact, you’re probably going to notice a much bigger difference moving to HFR modes than going from 1080p to 4K. Especially if you’re a console gamer who’s been playing at 30fps for decades.

Now, you may be wondering about other 4K features like HDR and 10-bit colour depths. Well, those certainly do make a difference, and are usually features exclusive to 4K sets. But your device doesn’t need to be outputting at 4K to utilize them. They’ll still work at 1080p. So for console gamers, who primarily play in living rooms on typical consumer televisions, 4K is little more than marketing fluff. Much like ray-tracing, which RDNA2 GPUs are notoriously bad at. And we won’t even discuss how silly 8K is.

PC gaming is a bit of a different beast though. If you’re playing at a desk rather than on a TV, then yes, you will see a benefit to 4K at monitor sizes above 25”. But there are plenty of other things to consider. Generally speaking, you’ll need something upwards of an RTX 3080 or 6800 XT to get that full fat 4K/60fps experience. Well, in newer games anyway. Unfortunately, 4K capable GPUs are very expensive, and were even before the chip shortage. Given that your average 4K/60 capable GPU runs as much as both the Series X and PS5 combined, you’re really hitting that wall of diminishing returns when it comes to newer games.

New techniques like DLSS and FSR have emerged that can fudge 4K to a pretty respectable level through upscaling, AI-assisted in the case of DLSS. So if you absolutely do have to play on a 4K monitor, there are options for cheaper GPUs. Though these options are largely on a game by game basis rather than universal. 1440p displays tend to offer a more cost effective happy medium. Especially those capable of supporting high refresh rates. Meaning that you can get an experience that’s almost, or even just as good as true 4K, without breaking the bank.